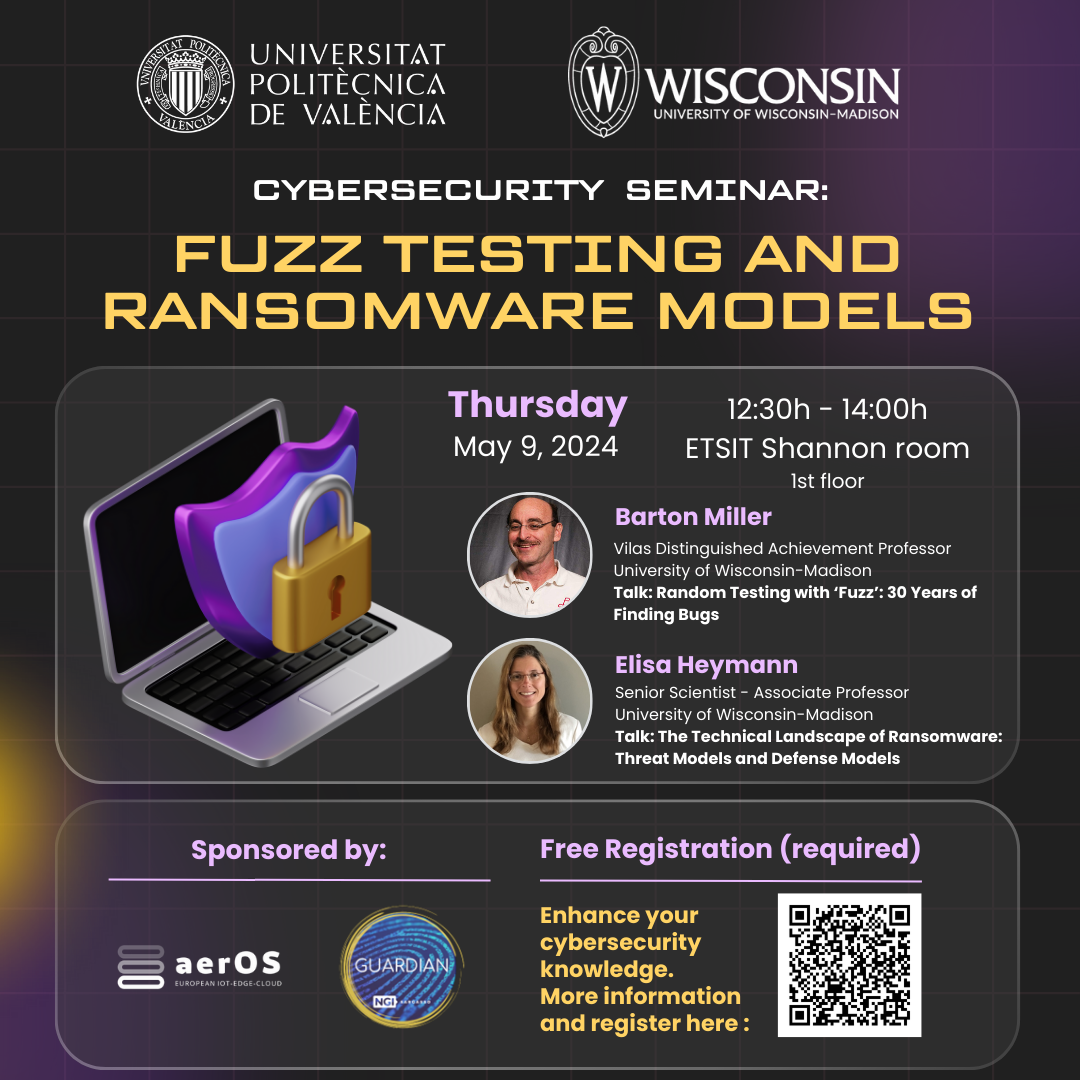

Next Thursday, 9 May, at 12:30, we will have the honour of welcoming Barton P. Miller and Elisa Heymann, distinguished professors from the University of Wisconsin, who will give two interesting talks on cybersecurity.

Venue: 4D Building, 1st floor, Shannon room, Universitat Politècnica de València.

Please confirm your attendance via the following link:

Barton P. Miller’s Talk: Random Testing with ‘Fuzz’: 30 Years of Finding Bugs

Fuzz testing has passed its 30th birthday and, in that time, has gone from a disparaged and mocked technique to the foundation of many efforts in software engineering and testing. The key idea behind fuzz testing is to use random input together with an extremely simple test oracle that looks only for crashes or hangs in the program. Importantly, in all our studies, we made our tools, test data, and results public so that others could reproduce the work. In addition, we located the cause of each failure we triggered and identified the common causes of such failures.

In the last several years, there has been a huge amount of progress and many new developments in fuzz testing. Hundreds of papers have been published on the subject and dozens of PhD dissertations have been produced. In this talk, I will review the progress of the last 30 years, describe our simple approach (what is now called black-box generational testing), and show how it is still relevant and effective today.
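To make the idea of black-box generational testing concrete, here is a minimal sketch in Python. It is not the UW fuzz tool: the function names (`random_input`, `classify`, `fuzz`) and the defaults (inputs of up to 4096 bytes, a 5-second hang timeout) are hypothetical choices for illustration. It generates random bytes from scratch, feeds them to a program's standard input, and applies the simple crash-or-hang oracle: a run counts as a crash if the process is killed by a signal (POSIX only), and as a hang if it exceeds the timeout.

```python
import random
import subprocess
import sys

# Hypothetical defaults chosen for illustration, not taken from the UW fuzz tool.
MAX_LEN = 4096
TIMEOUT_S = 5.0


def random_input(max_len=MAX_LEN, seed=None):
    """Generate a random byte string from scratch (generational, not mutational)."""
    rng = random.Random(seed)
    length = rng.randint(1, max_len)
    return bytes(rng.getrandbits(8) for _ in range(length))


def classify(cmd, data, timeout=TIMEOUT_S):
    """Black-box oracle: run cmd with data on stdin and report 'crash', 'hang', or 'ok'."""
    try:
        proc = subprocess.run(
            cmd,
            input=data,
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout,  # subprocess.run kills the child when this expires
        )
    except subprocess.TimeoutExpired:
        return "hang"
    # On POSIX, a negative return code -N means the child was killed by signal N
    # (for example SIGSEGV or SIGABRT), which this oracle counts as a crash.
    if proc.returncode < 0:
        return "crash"
    # Any normal exit, even with a nonzero status, passes this minimal oracle.
    return "ok"


def fuzz(cmd, trials=100, seed=0, timeout=TIMEOUT_S):
    """Run `trials` random inputs; return outcome counts and the failing inputs."""
    counts = {"ok": 0, "crash": 0, "hang": 0}
    failing = []
    for i in range(trials):
        data = random_input(seed=seed + i)  # per-trial seed makes each run reproducible
        outcome = classify(cmd, data, timeout)
        counts[outcome] += 1
        if outcome != "ok":
            failing.append((seed + i, data))  # keep the input so the failure can be replayed
    return counts, failing


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python fuzz_sketch.py /path/to/utility [args...]
    counts, failing = fuzz(sys.argv[1:])
    print(counts)
```

Keeping the seed and the input for every failure mirrors the studies' emphasis on reproducibility: anyone can regenerate the exact input that caused a crash or hang. The oracle is deliberately crude; it needs no knowledge of what the program is supposed to do.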

In 1990, we published the results of a study of the reliability of standard UNIX application and utility programs. This study showed that by using simple (almost simplistic) random testing techniques, we could crash or hang 25–33% of these utility programs. In 1995, we repeated and significantly extended this study using the same basic technique: subjecting programs to random input streams. This study also included X Window applications and servers. A distressingly large number of UNIX applications still crashed with our tests, and X Window applications were at least as unreliable as command-line applications.

The commercial versions of UNIX fared slightly better than in 1990, but the biggest surprise was that Linux and GNU applications were significantly more reliable than the commercial versions.

In 2000, we took another stab at random testing, this time testing applications running on Microsoft Windows. Given valid random mouse and keyboard input streams, we could crash or hang 45% (NT) to 64% (Win2K) of these applications.

In 2006, we continued the study, looking at both command-line and GUI-based applications on the relatively new Mac OS X operating system. While the command-line tests had a reasonable 7% failure rate, the GUI-based applications, from a variety of vendors, had a distressing 73% failure rate.

Recently, we decided to revisit our basic techniques on commonly used UNIX systems. We were interested to find that these techniques were still effective and useful.

In this talk, I will discuss our testing techniques and then present the various test results in more detail. In many cases, these results include identification of the bugs and of the coding practices that caused them. In several cases, these bugs introduced security issues. The talk will conclude with some philosophical musings on the current state of software development.

Papers on the five studies (1990, 1995, 2000, 2006, and 2020), the software, and the bug reports can be found at the UW fuzz home page:

http://www.cs.wisc.edu/~bart/fuzz/

Elisa Heymann’s Talk: The Technical Landscape of Ransomware: Threat Models and Defense Models

Ransomware has become a global problem. Given the reality that ransomware will eventually strike your system, we focus on recovery rather than prevention. We assume that the attacker has already entered the system and rendered it inoperative to some extent.

We start by presenting the broad landscape of how ransomware can affect a computer system, suggesting how the IT manager, system designer, and operator might prepare to recover from such an attack.

We show the ways in which ransomware can (and sometimes cannot) attack each component of a system. For each attack scenario, we describe how the system might be subverted, the ransom act, the impact on operations, the difficulty of carrying out the attack, the cost to recover, how easily the attack can be detected, and how frequently the attack is found in the wild (if at all). We also describe strategies that could be used to recover from these attacks.

Some of the ransomware scenarios that we describe reflect attacks that are common and well understood, and many of these scenarios have active attacks in the wild. Other scenarios are less common and do not appear to have any active attacks. In many ways, these less common scenarios are the most interesting ones, because they offer an opportunity to build defenses ahead of attacks.

This work represents our best understanding of the current threats and attacks. We actively solicit feedback and contributions to make this document more accurate, complete, and timely.